How To Install Hadoop On Ubuntu Step By Step

Hadoop is a free, open-source, Java-based software framework used for the storage and processing of large datasets on clusters of machines. It uses HDFS to store its data and processes that data using MapReduce. It is an ecosystem of Big Data tools that are primarily used for data mining and machine learning.

Apache Hadoop 3.3 comes with noticeable improvements and many bug fixes over the previous releases. It has four major components: Hadoop Common, HDFS, YARN, and MapReduce.

This tutorial explains how to install and configure Apache Hadoop on an Ubuntu 20.04 LTS Linux system.

Step 1 – Installing Java

Hadoop is written in Java. Releases before 3.3 support only Java 8; Hadoop 3.3 and later support the Java 11 runtime as well as Java 8.

You can install OpenJDK 11 from the default apt repositories:

sudo apt update
sudo apt install openjdk-11-jdk

Once installed, verify the installed version of Java with the following command:

java -version

You should get the following output:

openjdk version "11.0.11" 2021-04-20
OpenJDK Runtime Environment (build 11.0.11+9-Ubuntu-0ubuntu2.20.04)
OpenJDK 64-Bit Server VM (build 11.0.11+9-Ubuntu-0ubuntu2.20.04, mixed mode, sharing)
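If you want to script this check, the version string can be parsed instead of read by eye. The following is a minimal sketch, not part of the original steps: the function names java_major_version and is_supported_java are made up for illustration, and the accepted versions (8 and 11) simply mirror what this tutorial says about Hadoop 3.3.

```shell
#!/usr/bin/env bash
# Extract the major Java version from the first line of `java -version` output.
# Handles both the legacy "1.8.0_292" scheme (major = 8) and the
# modern "11.0.11" scheme (major = 11).
java_major_version() {
  local line="$1" version
  # Pull out the quoted version string, e.g. 11.0.11 or 1.8.0_292.
  version=$(printf '%s\n' "$line" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
  [ -n "$version" ] || return 1
  case "$version" in
    1.*) version=${version#1.} ;;   # legacy scheme: strip the leading "1."
  esac
  # Keep everything before the first '.', '_' or '-'.
  printf '%s\n' "${version%%[._-]*}"
}

# Hypothetical helper: accept only the runtimes this tutorial names (8 and 11).
is_supported_java() {
  case "$1" in
    8|11) return 0 ;;
    *)    return 1 ;;
  esac
}

# Usage: `java -version` prints to stderr, hence the 2>&1.
if command -v java >/dev/null 2>&1; then
  major=$(java_major_version "$(java -version 2>&1 | head -n 1)")
  if is_supported_java "$major"; then
    echo "Java $major is one of the runtimes this tutorial covers"
  else
    echo "Java ${major:-unknown} is not a runtime this tutorial covers" >&2
  fi
fi
```

If the script reports an unexpected version, check which JDK the java command resolves to before continuing.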

Step 2 – Create a Hadoop User

It is a good idea to create a separate user to run Hadoop for security reasons.

Run the following command to create a new user named hadoop:

sudo adduser hadoop

Provide and confirm the new password as shown below:

Adding user `hadoop' ...
Adding new group `hadoop' (1002) ...
Adding new user `hadoop' (1002) with group `hadoop' ...
Creating home directory `/home/hadoop' ...
Copying files from `/etc/skel' ...
New password:
Retype new password:
passwd: password updated successfully
Changing the user information for hadoop
Enter the new value, or press ENTER for the default
Full Name []:
Room Number []:
Work Phone []:
Home Phone []:
Other []:
Is the information correct? [Y/n] y

Step 3 – Configure SSH Key-based Authentication

Next, you will need to configure passwordless SSH authentication for the local system.

First, switch to the hadoop user with the following command:

su - hadoop

Next, run the following command to generate a public and private key pair:

ssh-keygen -t rsa

You will be asked to enter the filename. Just press Enter to accept the defaults and complete the process:

Generating public/private rsa key pair.
Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):
Created directory '/home/hadoop/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/hadoop/.ssh/id_rsa
Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub
The key fingerprint is:
SHA256:QSa2syeISwP0hD+UXxxi0j9MSOrjKDGIbkfbM3ejyIk [email protected]
The key's randomart image is:
+---[RSA 3072]----+
| ..o++=.+ |
|..oo++.O |
|. oo. B . |
|o..+ o * . |
|= ++o o S |
|.++o+ o |
|.+.+ + . o |
|o . o * o . |
| E + . |
+----[SHA256]-----+

Next, append the generated public key from id_rsa.pub to authorized_keys and set the proper permissions:

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
chmod 640 ~/.ssh/authorized_keys
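Note that running the cat command above a second time adds the same key to authorized_keys again. A small helper can make the step safe to repeat. This is only a sketch: authorize_key is a hypothetical function name, and it assumes the public key file holds a single line, as the one generated by ssh-keygen does.

```shell
#!/usr/bin/env bash
# Append a public key to an authorized_keys file only if it is not already
# listed, then restrict the file's permissions as in the step above.
# Assumption: the public key file contains exactly one line.
authorize_key() {
  local pubkey_file="$1" auth_file="$2" key
  key=$(cat "$pubkey_file") || return 1
  mkdir -p "$(dirname "$auth_file")"
  touch "$auth_file"
  # -q: quiet, -x: match the whole line, -F: treat the key as a fixed string.
  if ! grep -qxF -- "$key" "$auth_file"; then
    printf '%s\n' "$key" >> "$auth_file"
  fi
  chmod 640 "$auth_file"
}

# Usage (run as the hadoop user):
#   authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
```

Calling the function twice leaves a single entry in the file, so it can be included in a setup script that may be re-run.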

Next, verify passwordless SSH authentication with the following command:

ssh localhost

You will be asked to authenticate the host by adding its key to the list of known hosts. Type yes and hit Enter to authenticate the localhost:

The authenticity of host 'localhost (127.0.0.1)' can't be established.
ECDSA key fingerprint is SHA256:JFqDVbM3zTPhUPgD5oMJ4ClviH6tzIRZ2GD3BdNqGMQ.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Step 4 – Installing Hadoop

First, if you are not already logged in as the hadoop user, switch to it with the following command:

su - hadoop

Next, download Hadoop 3.3.0 using the wget command:

wget https://downloads.apache.org/hadoop/common/hadoop-3.3.0/hadoop-3.3.0.tar.gz

If this release is no longer available at that location, older releases are kept under https://archive.apache.org/dist/hadoop/common/.

Once downloaded, extract the downloaded file:

tar -xvzf hadoop-3.3.0.tar.gz

Next, rename the extracted directory to hadoop:

mv hadoop-3.3.0 hadoop

Next, you will need to configure the Hadoop and Java environment variables on your system.

Open the ~/.bashrc file in your favorite text editor:

nano ~/.bashrc

Append the lines below to the file. You can find the JAVA_HOME location by running the dirname $(dirname $(readlink -f $(which java))) command in a terminal.

export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64
export HADOOP_HOME=/home/hadoop/hadoop
export HADOOP_INSTALL=$HADOOP_HOME
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export HADOOP_YARN_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

Save and close the file. Then, activate the environment variables with the following command:

source ~/.bashrc

Next, open the Hadoop environment variable file:

nano $HADOOP_HOME/etc/hadoop/hadoop-env.sh

Again, set JAVA_HOME in the Hadoop environment:

export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64

Save and close the file when you are finished.
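The nested dirname and readlink command mentioned earlier in this step can be wrapped in a small function, which makes it easier to see what each part does. This is a sketch under assumptions: java_home_for is a hypothetical helper name, and readlink -f is used in its GNU coreutils form, which is what Ubuntu ships.

```shell
#!/usr/bin/env bash
# Resolve the JDK directory that owns a given java executable:
# follow symlinks to the real binary, then strip the trailing /bin/java.
java_home_for() {
  local java_bin="$1" real
  real=$(readlink -f "$java_bin") || return 1
  # readlink -f can succeed for a missing file, so check the result is executable.
  [ -x "$real" ] || return 1
  dirname "$(dirname "$real")"
}

# Usage: print the export line to paste into ~/.bashrc and hadoop-env.sh.
if java_path=$(command -v java); then
  echo "export JAVA_HOME=$(java_home_for "$java_path")"
fi
```

On a system set up as in Step 1, the printed path should match the JAVA_HOME value used above; if it differs, use the path the script prints.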

Step 5 – Configuring Hadoop

First, you will need to create the namenode and datanode directories inside the hadoop user's home directory:

Run the following commands to create both directories:

mkdir -p ~/hadoopdata/hdfs/namenode
mkdir -p ~/hadoopdata/hdfs/datanode

Next, edit the core-site.xml file and update it with your system hostname:

nano $HADOOP_HOME/etc/hadoop/core-site.xml

Change the following value to match your system hostname:

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop.tecadmin.com:9000</value>
  </property>
</configuration>
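If you set up several machines, you may prefer to generate core-site.xml instead of editing it by hand. The sketch below writes the same property shown above for a hostname you pass in. The function name write_core_site is an assumption made for illustration, and port 9000 is taken from the example value; adjust both to your setup.

```shell
#!/usr/bin/env bash
# Write a minimal core-site.xml whose fs.defaultFS points at the given host.
# Arguments: <hostname> <output file>. Port 9000 matches the example above.
write_core_site() {
  local host="$1" out="$2"
  cat > "$out" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${host}:9000</value>
  </property>
</configuration>
EOF
}

# Usage (as the hadoop user, after setting HADOOP_HOME):
#   write_core_site "$(hostname -f)" "$HADOOP_HOME/etc/hadoop/core-site.xml"
```

Whichever hostname you use must resolve on the machine itself, since the start scripts and HDFS clients connect to that address.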

Save and close the file. Then, edit the hdfs-site.xml file:

nano $HADOOP_HOME/etc/hadoop/hdfs-site.xml

Change the NameNode and DataNode directory paths as shown below:

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>file:///home/hadoop/hadoopdata/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>file:///home/hadoop/hadoopdata/hdfs/datanode</value>
  </property>
</configuration>

Save and close the file. Then, edit the mapred-site.xml file:

nano $HADOOP_HOME/etc/hadoop/mapred-site.xml

Make the following changes:

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

Save and close the file. Then, edit the yarn-site.xml file:

nano $HADOOP_HOME/etc/hadoop/yarn-site.xml

Make the following changes:

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>

Save and close the file when you are finished.

Step 6 – Start Hadoop Cluster

Before starting the Hadoop cluster, you will need to format the Namenode as the hadoop user.

Run the following command to format the Hadoop Namenode:

hdfs namenode -format

You should get the following output:

2022-11-23 10:31:51,318 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
2022-11-23 10:31:51,323 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.
2022-11-23 10:31:51,323 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at hadoop.tecadmin.net/127.0.1.1
************************************************************/

After formatting the Namenode, run the following command to start the Hadoop cluster:

start-dfs.sh

Once HDFS has started successfully, you should get the following output:

Starting namenodes on [hadoop.tecadmin.com]
hadoop.tecadmin.com: Warning: Permanently added 'hadoop.tecadmin.com,fe80::200:2dff:fe3a:26ca%eth0' (ECDSA) to the list of known hosts.
Starting datanodes
Starting secondary namenodes [hadoop.tecadmin.com]

Next, start the YARN service as shown below:

start-yarn.sh

You should get the following output:

Starting resourcemanager
Starting nodemanagers

You can now check the status of all Hadoop services using the jps command:

jps

You should see all the running services in the following output:

18194 NameNode
18822 NodeManager
17911 SecondaryNameNode
17720 DataNode
18669 ResourceManager
19151 Jps

Step 7 – Adjust Firewall

Hadoop is now started and listening on ports 9870 and 8088. Next, you will need to allow these ports through the firewall.
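Before opening the ports, it can be worth confirming that all five daemons from the jps listing are really running, since a missing one usually points to a configuration mistake in Step 5. The following is a rough sketch: missing_daemons is a hypothetical function name, and it only looks at the process names that jps prints.

```shell
#!/usr/bin/env bash
# Report which of the five Hadoop daemons are absent from jps-style output.
# Reads "<pid> <name>" lines on stdin, prints each missing daemon name on its
# own line, and returns non-zero if at least one is missing.
missing_daemons() {
  local output daemon status=0
  output=$(cat)
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare the second column exactly so NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$output" |
         awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "$daemon"
      status=1
    fi
  done
  return "$status"
}

# Usage (as the hadoop user):
#   jps | missing_daemons && echo "all Hadoop daemons are running"
```

If a name is printed, the log files under $HADOOP_HOME/logs are the first place to look for the reason.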

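Run the following commands as root to allow Hadoop connections through the firewall. They assume that firewalld is installed and running, which is not the case on a default Ubuntu installation:

firewall-cmd --permanent --add-port=9870/tcp
firewall-cmd --permanent --add-port=8088/tcp

If your server uses ufw instead, the equivalent commands are ufw allow 9870/tcp and ufw allow 8088/tcp.

Next, reload the firewalld service to apply the changes:

firewall-cmd --reload

Step 8 – Access Hadoop Namenode and Resource Manager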

To access the Namenode, open your web browser and visit the URL http://your-server-ip:9870. You should see the following screen:

http://hadoop.tecadmin.net:9870

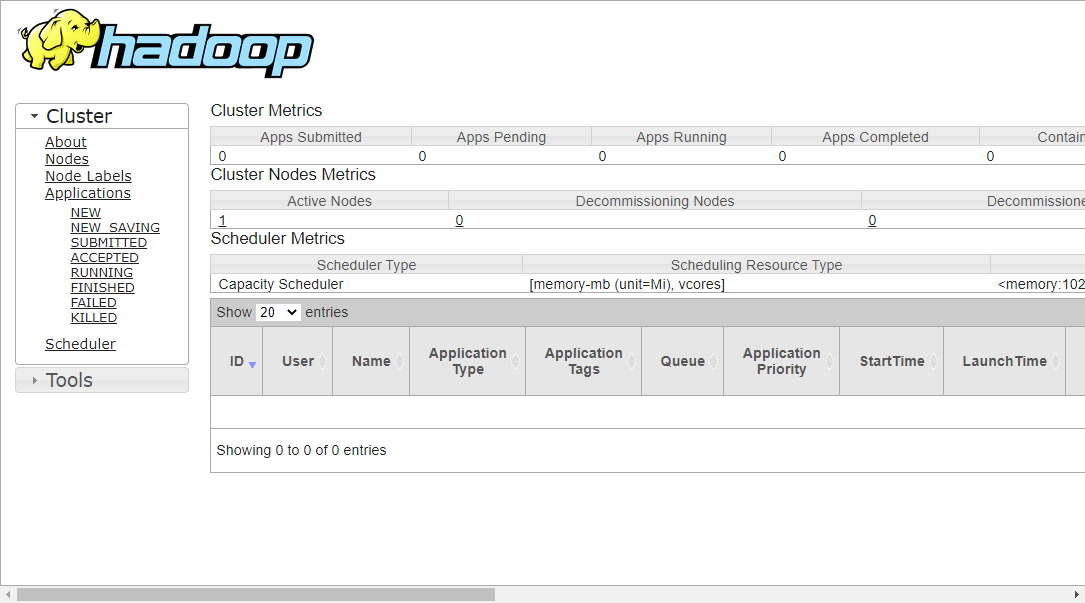

To access the Resource Manager, open your web browser and visit the URL http://your-server-ip:8088. You should see the following screen:

http://hadoop.tecadmin.net:8088
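You can also check both web interfaces from a terminal, which is handy on a server without a browser. This is a minimal sketch that assumes curl is installed; ui_is_up is a hypothetical function name, and your-server-ip is the same placeholder used in the URLs above.

```shell
#!/usr/bin/env bash
# Return success if the given URL answers with a non-error HTTP status.
# -s silences progress output, -f turns HTTP errors (>= 400) into a failure,
# -L follows redirects, --max-time bounds the wait in seconds.
ui_is_up() {
  curl -sfL --max-time 5 -o /dev/null "$1"
}

# Usage: replace your-server-ip with the address of the Hadoop host.
#   ui_is_up http://your-server-ip:9870 && echo "Namenode UI reachable"
#   ui_is_up http://your-server-ip:8088 && echo "Resource Manager UI reachable"
```

A failure here while jps shows the daemons running usually means the firewall rule from Step 7 is missing or the request is going to the wrong address.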

Step 9 – Verify the Hadoop Cluster

At this point, the Hadoop cluster is installed and configured. Next, we will create some directories in the HDFS filesystem to test Hadoop.

Let's create some directories in the HDFS filesystem using the following commands:

hdfs dfs -mkdir /test1
hdfs dfs -mkdir /logs

Next, run the following command to list the above directories:

hdfs dfs -ls /

You should get the following output:

Found 3 items
drwxr-xr-x - hadoop supergroup 0 2022-11-23 10:56 /logs
drwxr-xr-x - hadoop supergroup 0 2022-11-23 10:51 /test1

Also, put some files into the Hadoop file system. For example, put log files from the host machine into the Hadoop file system:

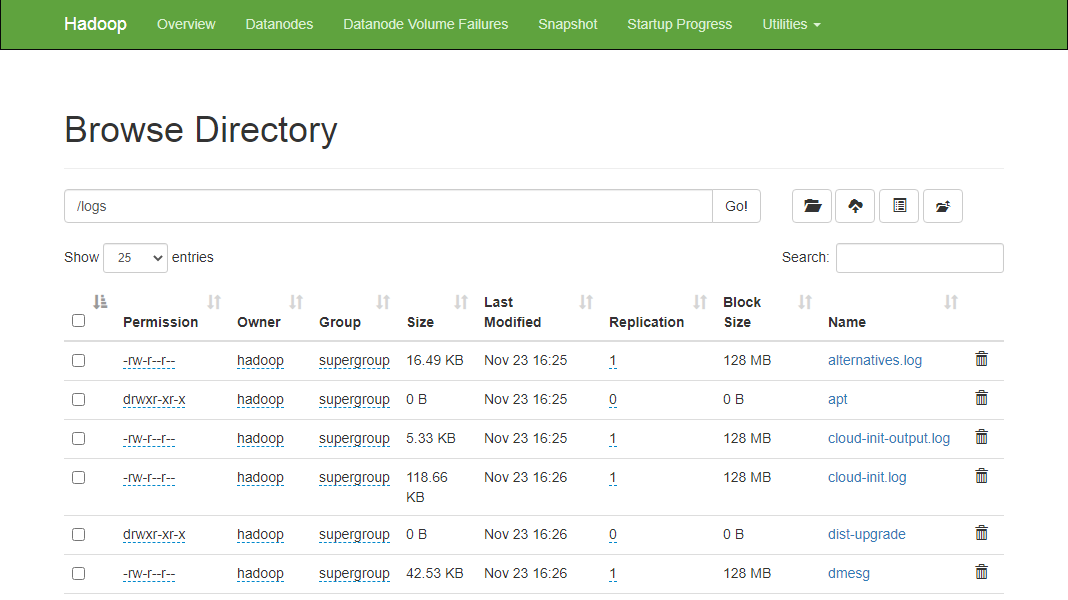

hdfs dfs -put /var/log/* /logs/

You can also verify the above files and directories in the Hadoop Namenode web interface.

Go to the Namenode web interface and click Utilities => Browse the file system. You should see the directories you created earlier in the following screen:

http://hadoop.tecadmin.net:9870/explorer.html

Step 10 – Stop Hadoop Cluster

You can stop the Hadoop Namenode and YARN services at any time by running the stop-dfs.sh and stop-yarn.sh scripts as the hadoop user.

To stop the Hadoop Namenode service, run the following command as the hadoop user:

stop-dfs.sh

To stop the Hadoop Resource Manager service, run the following command:

stop-yarn.sh

Conclusion

This tutorial explained, step by step, how to install and configure Hadoop on an Ubuntu 20.04 Linux system.

Source: https://tecadmin.net/install-hadoop-on-ubuntu-20-04/

Posted by: gonzalesmoseng.blogspot.com
